Introduction to CNNs (Speech Stamps)

- Authors

- Name

- Martin Andrews

- @mdda123

Presentation Link

Sam Witteveen and I started the TensorFlow and Deep Learning Singapore group on MeetUp back in February 2017, and the group has grown significantly over the last ~12 months. However, that also means that many of the people who joined recently missed our early sessions. So we’re re-running some of the earlier events as “Replay” / “Back-to-Basics” events, hoping that new people will be able to ‘catch up’ and get more out of coming to the main group events.

Our second ‘Back-to-Basics’ Meeting was supported by SGInnovate, in their office on Carpenter St.

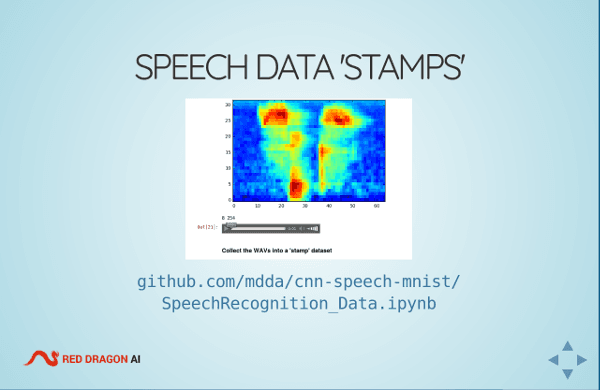

As in the original March talk last year, I presented an introduction to CNNs, which are typically presented as a vision solution, using MNIST as an example. However, my version has a bit of a twist: instead of using visual digits, I have created a spoken-word dataset (the digits 0 to 9, of course), and the CNN is trained to recognise spectrograms of the audio - i.e. the CNN is doing voice recognition!
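To make the 'audio as an image' idea concrete, here is a minimal sketch of turning a waveform into a small spectrogram 'stamp' that a CNN could consume like an MNIST digit. It is not the code from the talk: the function name `spectrogram_stamp`, the frame and hop sizes, the number of frequency bins and the synthetic test tone are all assumptions chosen for illustration.

```python
import numpy as np

def spectrogram_stamp(audio, frame_len=256, hop=128, n_bins=64):
    """Return a log-magnitude spectrogram of shape (n_bins, n_frames).

    All parameter values are illustrative assumptions, not the settings
    used in the original notebook.
    """
    audio = np.asarray(audio, dtype=np.float64)
    n_frames = 1 + (len(audio) - frame_len) // hop
    window = np.hanning(frame_len)

    # Slice the waveform into overlapping, windowed frames
    frames = np.stack([
        audio[i * hop : i * hop + frame_len] * window
        for i in range(n_frames)
    ])

    # Magnitude of the real FFT of each frame: (n_frames, frame_len//2 + 1)
    magnitude = np.abs(np.fft.rfft(frames, axis=1))

    # Keep the lowest n_bins frequencies and compress the dynamic range
    log_magnitude = np.log1p(magnitude[:, :n_bins])

    # Frequency on the vertical axis, time on the horizontal axis
    return log_magnitude.T

# A synthetic 1 kHz tone stands in for a recorded spoken digit
sample_rate = 8000
t = np.arange(sample_rate) / sample_rate
tone = np.sin(2 * np.pi * 1000 * t)

stamp = spectrogram_stamp(tone)
print(stamp.shape)
```

The resulting 2D array plays the same role as a greyscale digit image: it can be fed to ordinary convolutional layers, with time and frequency taking the place of the two image axes.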

As an added bonus, there’s also an ‘animals’ audio dataset, and the demo notebook includes an illustration of transfer learning, where animal names are learned solely by modeling the errors made by the network trained to recognise digits. Fortunately, during the demo, the transfer-learned model scored 4/4 on the test animal name examples (YMMV).
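One plausible reading of 'modeling the errors' is that the frozen digit network's outputs for an animal word (which are necessarily 'wrong', since no animal is a digit) are used as features for a small second classifier. The sketch below illustrates that idea with NumPy only. Everything in it is a stand-in: the frozen random weights replace the trained CNN, the inputs are synthetic vectors rather than spectrograms, and the nearest-centroid classifier is a choice made here for brevity, not necessarily what the notebook uses.

```python
import numpy as np

rng = np.random.default_rng(0)

N_INPUT, N_DIGITS, N_ANIMALS = 20, 10, 4

# Stand-in for the trained digit network: fixed weights that are never updated
frozen_w = rng.normal(size=(N_INPUT, N_DIGITS))

def digit_logits(x):
    """Pre-softmax outputs of the (hypothetical) frozen digit network."""
    return x @ frozen_w

def make_examples(class_means, n_per_class):
    """Synthetic 'animal' inputs: noisy copies of one mean vector per class."""
    xs, ys = [], []
    for label, mean in enumerate(class_means):
        xs.append(mean + 0.1 * rng.normal(size=(n_per_class, N_INPUT)))
        ys.append(np.full(n_per_class, label))
    return np.concatenate(xs), np.concatenate(ys)

animal_means = 5.0 * rng.normal(size=(N_ANIMALS, N_INPUT))
train_x, train_y = make_examples(animal_means, 20)
test_x, test_y = make_examples(animal_means, 5)

# The only 'training' for the new task: one centroid per animal,
# computed in the digit network's 10-dimensional output space
train_features = digit_logits(train_x)
centroids = np.stack([
    train_features[train_y == k].mean(axis=0) for k in range(N_ANIMALS)
])

def predict_animal(x):
    """Label each input with the animal whose centroid is nearest."""
    features = digit_logits(x)
    dists = np.linalg.norm(features[:, None, :] - centroids[None, :, :], axis=2)
    return dists.argmin(axis=1)

accuracy = float((predict_animal(test_x) == test_y).mean())
print(f"animal accuracy: {accuracy:.2f}")
```

The point of the design is that the digit network itself is untouched: only the small classifier on top of its outputs is fitted, which is why a handful of animal examples can be enough.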

The source for the CNN ‘Stamps’ Speech Recognition model is available on GitHub - if you have questions on the software, please leave an ‘issue’ there.

If there are any questions about the presentation, please ask below, or contact me using the details given on the slides themselves.