- Published on

Reinforcement Learning for Long-Horizon Multi-Turn Search Agents

- Authors

- Name

- Martin Andrews

- @mdda123

This paper was accepted to the NeurIPS 2025 Workshop on Multi-Turn Interactions in Large Language Models at NeurIPS 2025 in San Diego, California, USA.

Abstract

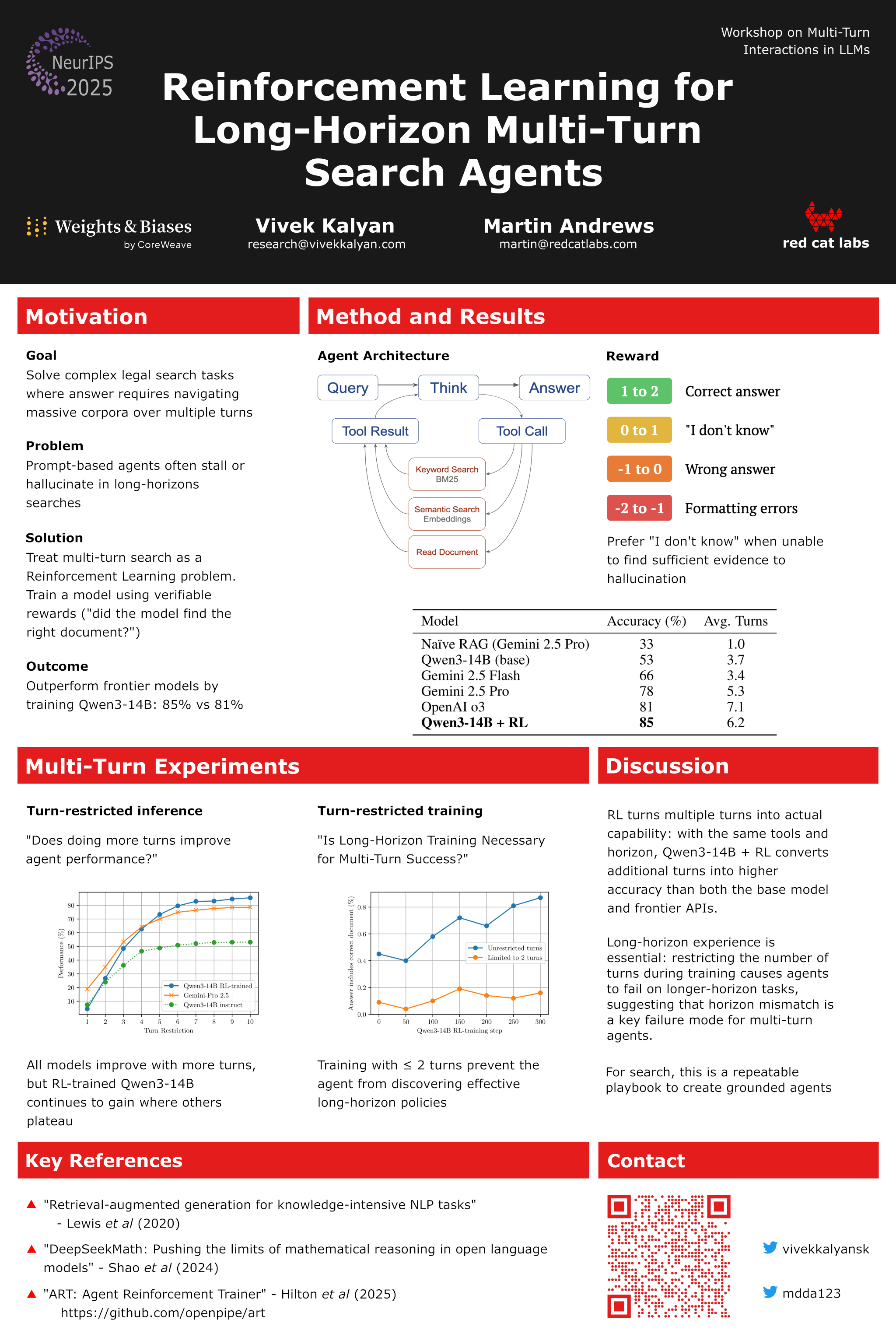

Large Language Model (LLM) agents can leverage multiple turns and tools to solve complex tasks, with prompt-based approaches achieving strong performance. This work demonstrates that Reinforcement Learning (RL) can push capabilities significantly further by learning from experience. Through experiments on a legal document search benchmark, we show that our RL-trained 14 Billion parameter model outperforms frontier class models (85% vs 78% accuracy). In addition, we explore turn-restricted regimes, during training and at test-time, that show these agents achieve better results if allowed to operate over longer multi-turn horizons.

Poster Version

Link to Paper

BiBTeX entry for the arXiv version (which has been updated after the workshop to reflect discussions there, etc):

@misc{kalyan2025multiturnagenticrag,

title={{Reinforcement Learning} for Long-Horizon Multi-Turn Search Agents},

author={Vivek Kalyan and Martin Andrews},

year={2025},

eprint={2510.24126},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/abs/2510.24126},

}

Acknowledgements

Support for this research was provided by the Google AI Developer Programs team, including access to the Gemini models and GPUs on Google Cloud Platform.