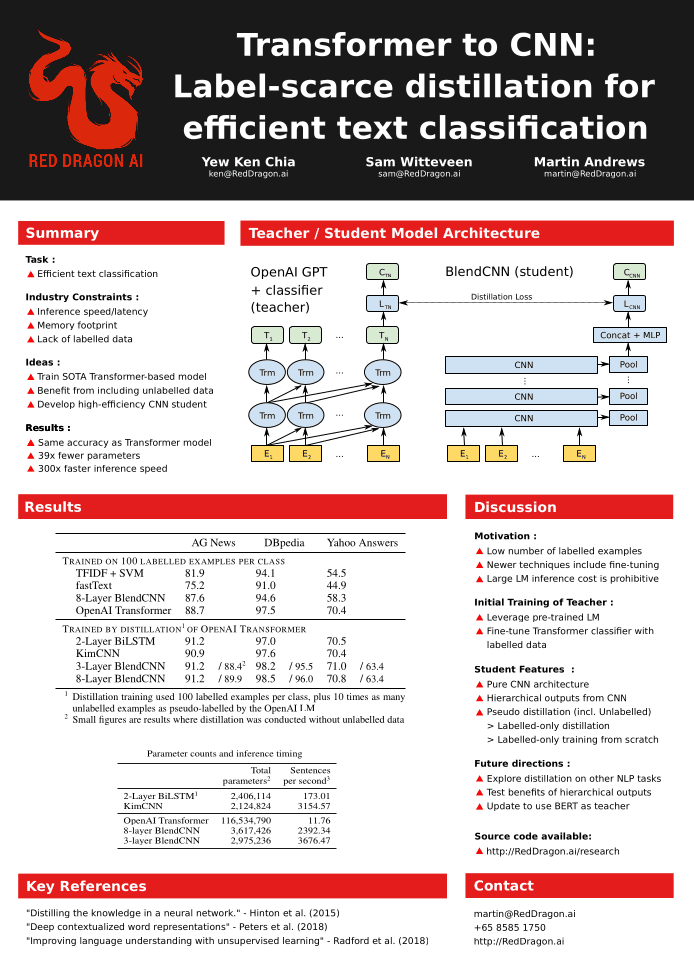

Transformer to CNN: Label-scarce distillation for efficient text classification

- Author: Martin Andrews (@mdda123)

This paper was accepted to the CDNNRIA workshop at NIPS-2018 in Montréal, Canada.

Abstract

Significant advances have been made in Natural Language Processing (NLP) modelling since the beginning of 2018. The new approaches allow for accurate results, even when there is little labelled data, because these NLP models can benefit from training on both task-agnostic and task-specific unlabelled data. However, these advantages come with significant size and computational costs.

This workshop paper outlines how our proposed convolutional student architecture, having been trained by a distillation process from a large-scale model, can achieve a 300x inference speedup and a 39x reduction in parameter count. In some cases, the student model's performance surpasses its teacher's on the studied tasks.
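The abstract does not spell out the distillation objective. As a rough illustration only, the sketch below assumes one common choice: the student is fit to the teacher's logits with a squared-error loss, computed on unlabelled inputs so that no ground-truth labels are needed. Both models are hypothetical toys (a fixed linear `teacher_logits` standing in for the large pretrained model, and a linear `student_logits` standing in for the convolutional student), written in plain Python so it runs without dependencies. It is not the paper's implementation.

```python
import random

# Hypothetical teacher: a fixed linear map from 2 features to 2 class logits,
# standing in for a large pretrained model.
def teacher_logits(x):
    return [2.0 * x[0] - 1.0 * x[1], -0.5 * x[0] + 1.5 * x[1]]

# Hypothetical student: a 2x2 weight matrix, w[k][j] maps feature j to logit k.
def student_logits(w, x):
    return [w[k][0] * x[0] + w[k][1] * x[1] for k in range(2)]

def mse(w, data):
    """Mean over inputs of 0.5 * squared distance between student and teacher logits."""
    total = 0.0
    for x in data:
        s, t = student_logits(w, x), teacher_logits(x)
        total += 0.5 * sum((a - b) ** 2 for a, b in zip(s, t))
    return total / len(data)

def distill(unlabelled, steps=300, lr=0.5):
    """Full-batch gradient descent on mse(); only unlabelled inputs are used."""
    w = [[0.0, 0.0], [0.0, 0.0]]
    n = len(unlabelled)
    for _ in range(steps):
        grad = [[0.0, 0.0], [0.0, 0.0]]
        for x in unlabelled:
            # The teacher's logits serve as the regression target.
            s, t = student_logits(w, x), teacher_logits(x)
            for k in range(2):
                err = s[k] - t[k]  # derivative of 0.5 * (s_k - t_k)^2 w.r.t. s_k
                for j in range(2):
                    grad[k][j] += err * x[j] / n
        for k in range(2):
            for j in range(2):
                w[k][j] -= lr * grad[k][j]
    return w

# Usage: "unlabelled" inputs drawn at random; no labels are ever created.
rng = random.Random(0)
unlabelled = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(100)]
w = distill(unlabelled)
print("loss before:", mse([[0.0, 0.0], [0.0, 0.0]], unlabelled))
print("loss after: ", mse(w, unlabelled))
```

Because the targets come from the teacher, the student's training set can be any unlabelled input, which is what makes this style of distillation attractive when labels are scarce.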

Poster Version

Link to Paper

And the BibTeX entry for the arXiv version:

    @article{DBLP:journals/corr/abs-1909-03508,
      author     = {Yew Ken Chia and
                    Sam Witteveen and
                    Martin Andrews},
      title      = {Transformer to {CNN:} Label-scarce distillation for efficient text
                    classification},
      journal    = {CoRR},
      volume     = {abs/1909.03508},
      year       = {2019},
      url        = {http://arxiv.org/abs/1909.03508},
      eprinttype = {arXiv},
      eprint     = {1909.03508},
      timestamp  = {Tue, 17 Sep 2019 11:23:44 +0200},
      biburl     = {https://dblp.org/rec/journals/corr/abs-1909-03508.bib},
      bibsource  = {dblp computer science bibliography, https://dblp.org}
    }