- Published on

RETRO vs LaMDA - Clash of the Transformers

- Authors

- Name

- Martin Andrews

- @mdda123

Presentation Link

Sam Witteveen and I started the Machine Learning Singapore Group (or MLSG for short) on MeetUp in February 2017 (it was previously named “TensorFlow and Deep Learning Singapore”), and the thirty-sixth MeetUp was our first in 2022, and was inspired by our attendence at the NeurIPS conference in December.

The meetup was titled “NeurIPS and the State of Deep Learning”, and was in two parts:

“State of Generative Modeling” - Sam :

- Current state of Generative Modeling, including :

- New GAN techniques

- Diffusion approaches

- MultiModal models

- .. and other interesting forms of generative modeling

- Current state of Generative Modeling, including :

“RETRO vs LaMDA : Clash of the Transformers” - Martin :

- Google released a paper about the LaMDA dialog model last night…

- So this talk supercedes the “Sparsity and Transformers” talk that was previously on the line-up

- The talk covered previous iterations of ‘large LMs’

- And then explored DeepMind’s new RETRO model

- And Google’s (very) new LaMDA model - including

- The overall model structure, datasets and training

- The focus on safety and groundedness

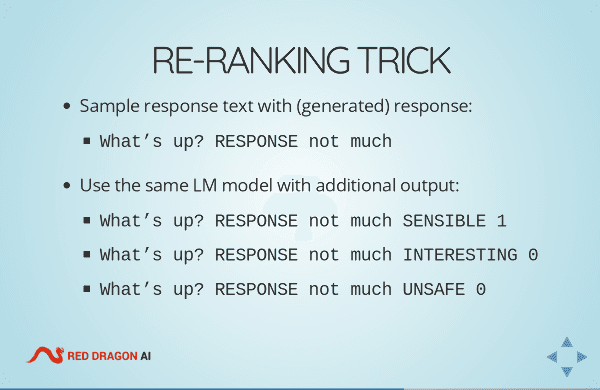

- The ‘tricks’ used to enable fine-tuning data to significantly improve its performance

- Google released a paper about the LaMDA dialog model last night…

There’s a video of me doing the talk on YouTube. Please Like and Subscribe!

The slides for my talk are here :

If there are any questions about the presentation please ask below, or contact me using the details given on the slides themselves.

GCP Discussion

In the discussion / Q&A session after the two talks, one topic that arose was how to migrate from the somewhat quirky Colab plaform, to something based on GCP - so I pointed to my blog post to do exactly that, and explained that this ‘GCP machine as local GPU’ worked so well that I had recently sold my local GPU (Nvidia Titan X 12Gb, Maxwell), and migrated onto GCP. One benefit (apart from all-in cost) has also been the ability to seamlessly upgrade to a larger GPU set-up once the code works, without having to make an infrastructure changes (i.e. disk can be brought up on a larger machine near instantly).

I should also give a shout-out to Google for helping this process by generously providing Cloud Credits to help get this all implemented.