Did the Model Understand the Question?

- Authors: Martin Andrews (@mdda123)

Presentation Link

Sam Witteveen and I started the TensorFlow and Deep Learning Singapore group on MeetUp in February 2017, and the eighteenth MeetUp, aka TensorFlow and Deep Learning: Explainability and Interpretability, was again hosted by Google Singapore.

To some extent, the theme of the MeetUp was set by Lee XA volunteering to talk about “Explainable AI : Shapley Values and Concept Activation Vectors”, based on his experience applying Shapley values, as described in Scott M. Lundberg and Su-In Lee's NIPS 2017 paper “A Unified Approach to Interpreting Model Predictions”, to a transfer-learned CNN model.

Before that, though, to set the scene, we had a talk, “Explainability : An Overview”, by Hardeep Arora, which approached the explainability issue from the direction of ‘regular’ data science.

We also had an excellent last-minute lightning talk by Timothy Liu about implementing CPU/GPU sharing on a machine, using Kubeflow to orchestrate a collection of Docker containers delivering Jupyter.

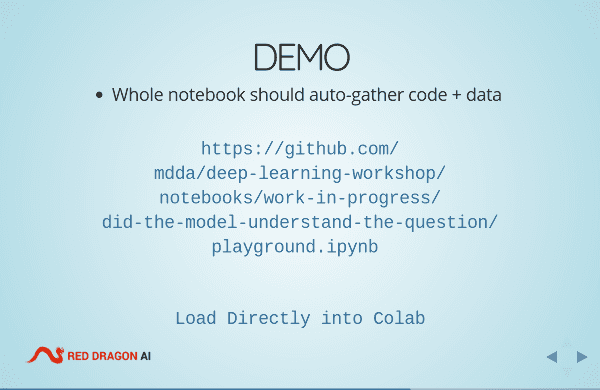

For my part, I gave a talk titled “Did the Model Understand the Question?”, which explained some of the thinking in the paper of the same title by Mudrakarta et al. (2018).

The slides for my talk are here :

If there are any questions about the presentation, please ask below or contact me using the details given on the slides themselves.