Language Model Assisted Explanation Generation

- Authors

- Martin Andrews

- @mdda123

This paper, written for the workshop's Shared Task, was accepted to the TextGraphs-13 workshop at EMNLP-IJCNLP 2019 in Hong Kong.

Abstract

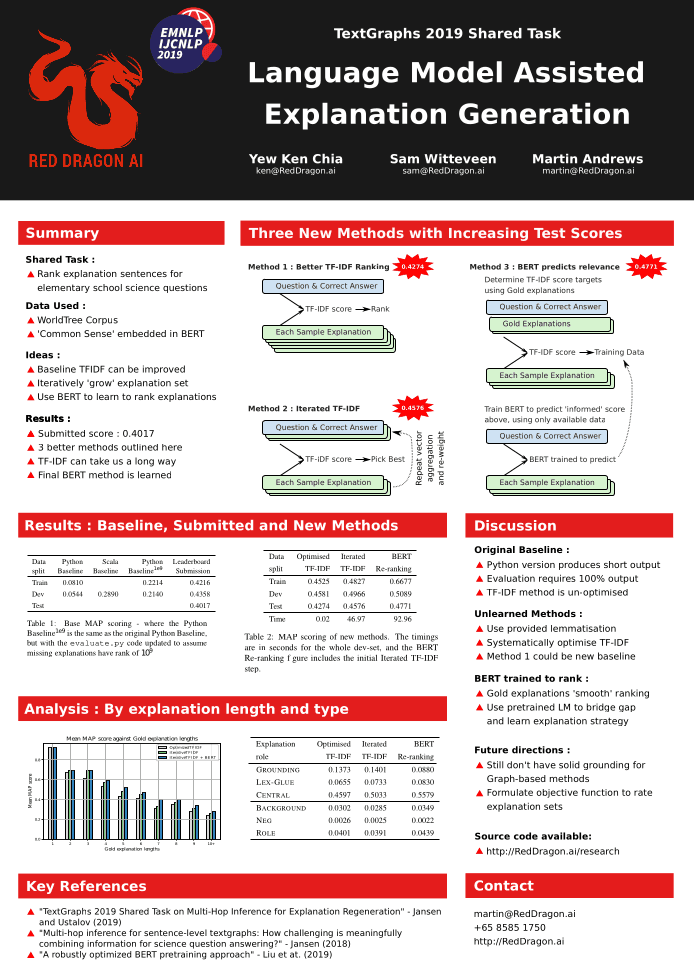

The TextGraphs-13 Shared Task on Explanation Regeneration asked participants to develop methods to reconstruct gold explanations for elementary science questions. Red Dragon AI's entries used the language of the questions and explanation text directly, rather than constructing a separate graph-like representation. Our leaderboard submission placed us 3rd in the competition, but we present here three methods of increasing sophistication, each of which scored successively higher on the test set after the competition closed.

Poster Version

Link to Paper

And the BibTeX entry for the arXiv version:

@article{DBLP:journals/corr/abs-1911-08976,
  author     = {Yew Ken Chia and Sam Witteveen and Martin Andrews},
  title      = {Red Dragon {AI} at TextGraphs 2019 Shared Task: Language Model Assisted Explanation Generation},
  journal    = {CoRR},
  volume     = {abs/1911.08976},
  year       = {2019},
  url        = {http://arxiv.org/abs/1911.08976},
  eprinttype = {arXiv},
  eprint     = {1911.08976},
  timestamp  = {Tue, 03 Dec 2019 14:15:54 +0100},
  biburl     = {https://dblp.org/rec/journals/corr/abs-1911-08976.bib},
  bibsource  = {dblp computer science bibliography, https://dblp.org}
}