
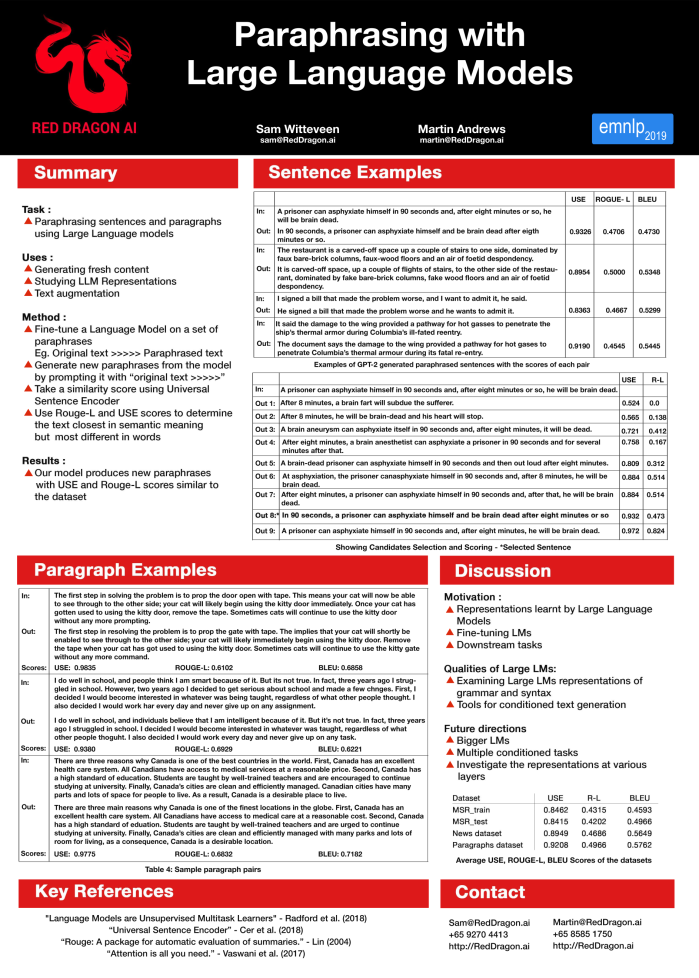

Paraphrasing with Large Language Models

- Author: Martin Andrews (@mdda123)

This paper was accepted to the Workshop on Neural Generation and Translation (WNGT) at EMNLP-IJCNLP 2019 in Hong Kong.

Abstract

Recently, large language models such as GPT-2 have shown themselves to be extremely adept at text generation and have also been able to achieve high-quality results in many downstream NLP tasks such as text classification, sentiment analysis and question answering with the aid of fine-tuning. We present a useful technique for using a large language model to perform the task of paraphrasing on a variety of texts and subjects. Our approach is demonstrated to be capable of generating paraphrases not only at a sentence level but also for longer spans of text such as paragraphs without needing to break the text into smaller chunks.
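A common way to turn paraphrasing into a plain language-modelling task, so that a model like GPT-2 can be fine-tuned on it, is to join each source text and its paraphrase into one training string with a separator. At inference time you prompt with the source plus the separator and keep whatever the model writes before an end marker. The sketch below shows only that text-formatting step and loads no model. It is an illustrative assumption, not the paper's exact recipe: the separator string and the helper names (`make_training_text`, `make_prompt`, `extract_paraphrase`, `build_corpus`) are hypothetical. The end marker reuses `<|endoftext|>`, the end-of-text token string from GPT-2's vocabulary.

```python
from typing import List, Tuple

# Hypothetical separator: any string unlikely to appear in ordinary text works.
SEPARATOR = " >>><<< "
# GPT-2's end-of-text token string, used here to mark where a paraphrase stops.
END_MARKER = " <|endoftext|>"


def make_training_text(source: str, paraphrase: str) -> str:
    """Join one (source, paraphrase) pair into a single fine-tuning string."""
    return source.strip() + SEPARATOR + paraphrase.strip() + END_MARKER


def build_corpus(pairs: List[Tuple[str, str]]) -> str:
    """Format every pair and put one example per line."""
    return "\n".join(make_training_text(s, p) for s, p in pairs)


def make_prompt(source: str) -> str:
    """Inference prompt: the source followed by the separator, left open."""
    return source.strip() + SEPARATOR


def extract_paraphrase(prompt: str, generated: str) -> str:
    """Drop the echoed prompt, then cut the continuation at the end marker."""
    if generated.startswith(prompt):
        continuation = generated[len(prompt):]
    else:
        continuation = generated
    end = continuation.find(END_MARKER.strip())
    if end != -1:
        continuation = continuation[:end]
    return continuation.strip()


if __name__ == "__main__":
    pairs = [("The cat sat on the mat.", "A cat was sitting on the mat.")]
    print(build_corpus(pairs))
    # The cat sat on the mat. >>><<< A cat was sitting on the mat. <|endoftext|>

    prompt = make_prompt("It is raining heavily today.")
    # Stand-in for text returned by a fine-tuned model (no model is called here).
    fake_output = prompt + "Today there is a downpour. <|endoftext|> unrelated text"
    print(extract_paraphrase(prompt, fake_output))
    # Today there is a downpour.
```

Because the formatting works on arbitrary strings, a whole paragraph can serve as the source without being chunked into sentences. In practice the source, separator and paraphrase together must fit in the model's context window, which is 1,024 tokens for GPT-2.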

Poster Version

Link to Paper

And the BibTeX entry for the arXiv version:

@article{DBLP:journals/corr/abs-1911-09661,
  author     = {Sam Witteveen and
                Martin Andrews},
  title      = {Paraphrasing with Large Language Models},
  journal    = {CoRR},
  volume     = {abs/1911.09661},
  year       = {2019},
  url        = {http://arxiv.org/abs/1911.09661},
  eprinttype = {arXiv},
  eprint     = {1911.09661},
  timestamp  = {Tue, 03 Dec 2019 14:15:54 +0100},
  biburl     = {https://dblp.org/rec/journals/corr/abs-1911-09661.bib},
  bibsource  = {dblp computer science bibliography, https://dblp.org}
}